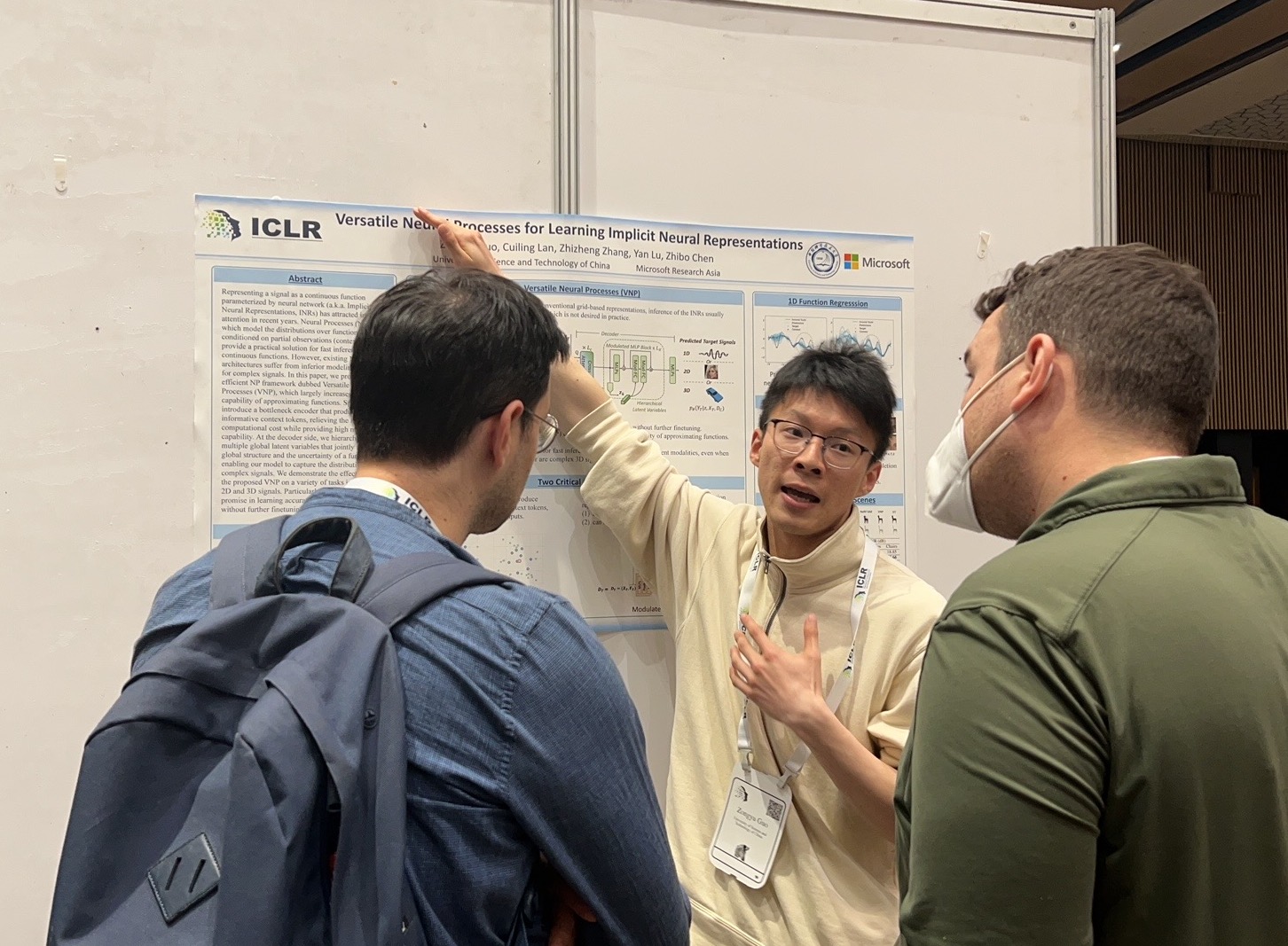

Zongyu Guo (郭宗昱)

I am a Researcher in the Media Computing Group at Microsoft Research Asia, Beijing. I received my PhD in 2024 from the University of Science and Technology of China (USTC), advised by Prof. Zhibo Chen (Page). Before that, I was an undergraduate in the Department of Electronic Engineering and Information Science, also at USTC, from 2015 to 2019, and was named an Outstanding Graduate.

I visited the Machine Learning Group in the Computational and Biological Learning (CBL) Lab at the University of Cambridge, where I was advised by Prof. José Miguel Hernández-Lobato (Page) and collaborated with Gergely Flamich.

Feel free to email me if you are interested in my research.

Quick links: Email, Google Scholar, Resume.